8 Quick Tips about A/B Split Testing

When you’re writing the copy for marketing emails or landing pages, you always wonder how readers are going to respond, right? You wonder whether this will be the email that gets the most opens and clicks or whether, instead, people will be turned off by a subject line that feels a bit too much like the dreaded clickbait. (And yes, in true clickbait fashion, the title of this post almost became “8 Ways You’re Doing A/B Testing Wrong, and #4 Will Surprise You!” But I refrained.)

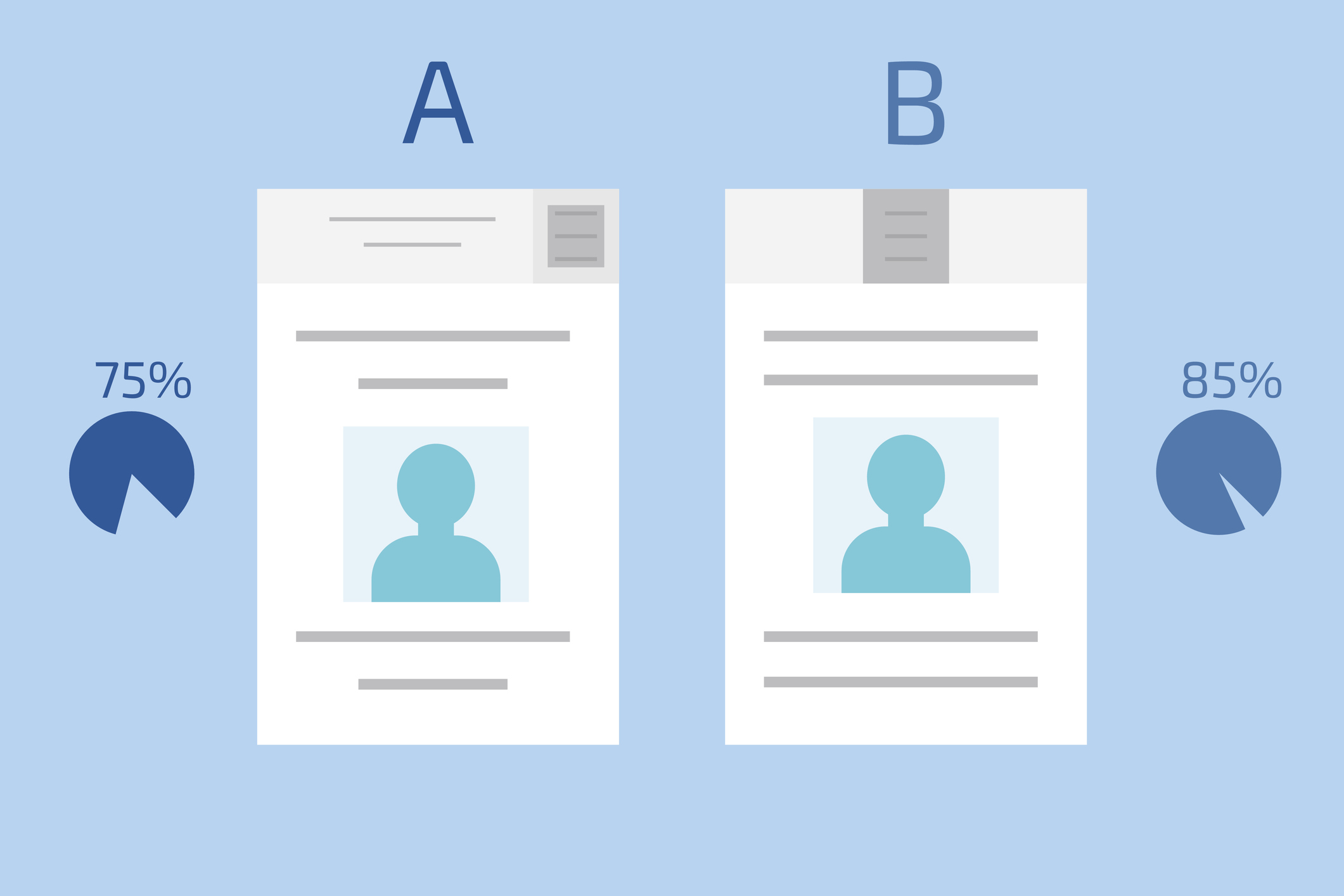

How can you figure out what works for your readers and, sometimes more important, what doesn’t resonate with them? A/B split testing is here to help. Read on for eight tips; maybe one or more of them actually WILL surprise you.

- One Test to Rule Them All. Test only one thing in an A/B split test. Just one. If the two versions differ in more than one way, you won’t know which difference made someone click.

- Take Control. Every A/B split test needs a “control” version, which is the email or landing page as you would normally send or publish it, and a “test” version, which changes the one element you’re testing to see whether it improves results.

- Timing Is Everything. For a proper A/B split test, you can’t send the control version on, say, a Tuesday of one week and the test version on a Friday. (The exception is when the day of the week is the thing you’re testing, in which case have at it!) Otherwise, you won’t know whether the change in conversions came from the different day or from the element you’re actually testing. Send both versions on the same day and at essentially the same time.

- Email Subject Lines. These are by far the easiest thing to test. Take the exact same email and send it with one subject line to a randomly selected half of your audience and with a different subject line to the other half. I’ve used this approach many times to see what prompted readers to take action. One subject line I tested played on a top fear for managers everywhere. The original subject line was “How To Retain Your Top IT Employees,” and the test subject line read, “6 Reasons Your Top IT Employees Will Quit.” The test mailing generated a 32% increase in clicks and a 33% increase in registrations over the original. So maybe there is a point to clickbait.

- Headlines. The same idea applies to headlines. You can test whether a question as a headline performs better or worse than a statement, or whether touting a product’s benefits converts better than spelling out the problems it helps readers avoid. Of course, you can also pit a creative headline against a tried-and-true one.

- Images. Wondering whether to use a picture of your product on your landing page or whether a photo of a smiling person will perform better? A/B split test, stat! The image on a landing page or in an email is another common element to test, so you can find out which one generates more interest.

- Click The Button. Where you place your call-to-action (CTA) button on a landing page, or what color it is, can make a real difference in conversions. Try placing the CTA button on the right-hand side of the page in one version and on the left-hand side in the other.

- Color Your World. Or try changing the color of your CTA button. Simply switching the button from green to orange can produce an upswing in conversions. Seriously. Unbounce tested this and found that orange is their new go-to for CTA buttons. Who knew?
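
For the more hands-on readers, here is a minimal Python sketch of the two mechanical pieces of a subject-line test: randomly splitting a mailing list into a control half and a test half, and computing the percentage lift of the test version over the control. It assumes your list is simply a Python list of email addresses; the function names `split_audience` and `relative_lift` and the click numbers are hypothetical, made up for illustration rather than taken from any real campaign or email tool.

```python
import random


def split_audience(emails, seed=None):
    """Hypothetical helper: randomly split a mailing list into (control, test) halves."""
    shuffled = list(emails)                  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)    # random order, so neither half is biased
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]    # odd-sized lists give the extra reader to "test"


def relative_lift(control_rate, test_rate):
    """Hypothetical helper: percent change of the test rate over the control rate."""
    return (test_rate - control_rate) / control_rate * 100


if __name__ == "__main__":
    audience = [f"reader{i}@example.com" for i in range(10)]
    control, test = split_audience(audience, seed=42)
    print(len(control), len(test))  # 5 5

    # Hypothetical results: 40 vs. 50 clicks out of 1,000 sends per version
    print(f"{relative_lift(40 / 1000, 50 / 1000):.0f}% lift")  # 25% lift
```

The seed is optional; it just makes the split reproducible. The random shuffle is the important part: splitting the list alphabetically or by signup date could sneak a second difference into the test and break the one-variable rule.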

Of course, as the old saying goes, your mileage may vary. Test these out for yourself and let us know how your A/B split tests go!